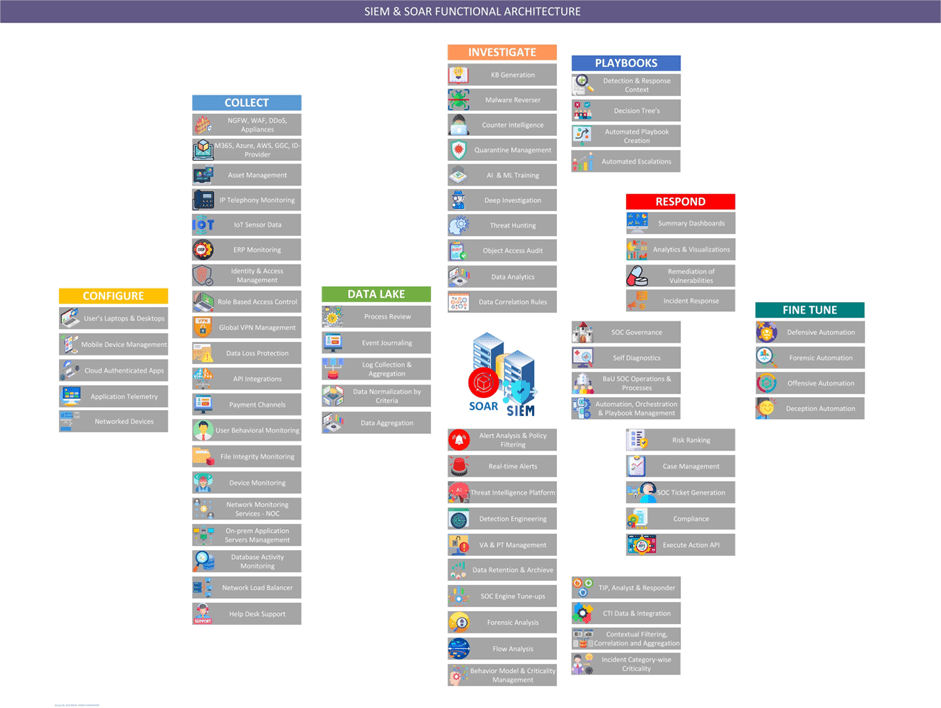

The below picture illustrates operational architecture of the SIEM & SOAR in an integrated function:

This is where the big picture comes in, from ingress to egress. As you can see in the picture the data collectors need to be configured in each device, either by agent or agentless or by default the OS or firmware has data plane, management plane and console plane pre-configured, and if you have the ERP or identical solution in place, they most likely have some sort of API or service wise and identifiable services that can be automatically scanned, configured to generate and produce actionable logs that can be fed into the SIEM & SOAR combined.

Image: developed by Shahab Al Yamin Chawdhury

Now, that you have configured your data or log shipping to a central repository, you should have a data retention plan of how many days you need to keep them or to append them in a certain day or not. As it will prove to be a serious burden in longer times. When SIEM gets its hands on the logs, it starts correlations, and types of events are grouped together, to have a more meaningful insight. As the SIEM starts you will get a burst of events populated, don’t worry, apply those visibility rules for data correlations. Ingestion rules will minimize the log correlations, and only when required, enable, or disable certain rules which is not required. Do remember, approve all documents, as the moment you have raised things for approval, it would be known to the SOC manager and to the SOC director, the moment you will not be asked or been accountable anymore.

Afterwards, SIEM and SOAR will continuously check for the rules for flow analysis, and will gather information for review and detection engineering takes place for the notifications, real-time alerts may take place or the event will go through alert analysis and policy filtering, if the event is known or unknown kind. Data analysis finalized by deep investigation and a managed orchestration takes place within the integrated SIEM and SOAR to produce actionable results, and a case is generated with all of OSINT data, correlations, attack type mapping, and compliance mapping with kill-chain and actionable content put together for the analysts to take on a deeper investigation. Playbooks are then initiated for a manual case investigation, and by type, the rollout takes place. The identified source and its data can be quarantined in this phase should it required. A ticket gets generated with a severity class, hash data reviewed, remediated, and action API gets executed for KB generation and if a tune-up required for the data aggregation, it flags for a revisiting of rules for the defensive, offensive, forensic and deception automation services.

Any thoughts on disaster recovery on your SOC?

Since you are going to deploy a SOC, how would you deploy these servers? Standalone mode? You should at least have multiple servers in HA mode with either in OS cluster or service cluster mode. I would recommend for the OS cluster mode and have a separate DB cluster as well for faster indexing and R/W requirements.